“Data scientist” means different things across companies. In practice, most roles sit somewhere between analytics (insights, experiments, dashboards) and machine learning (models that power product features).

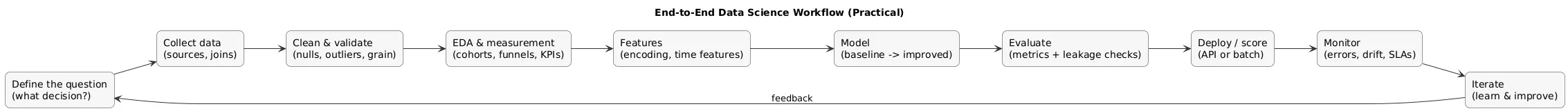

The fastest path to employability is not “more courses”. It is an end-to-end workflow: take messy data, turn it into reliable datasets, answer business questions, build (when appropriate) predictive models, and communicate trade-offs and limitations clearly.

Hiring signal

Recruiters do not hire “certificates”. They hire proof: projects that show problem framing, data cleaning, correct evaluation, and communication that stakeholders can act on.

1. What Data Scientists Actually Do (Day to Day)

Depending on the team, your week may include:

- Problem framing: aligning on the decision to improve (pricing, retention, conversion, fraud, etc.).

- Data discovery: source-of-truth tables, definitions, and data quality checks.

- EDA and measurement: segmentation, cohorts, funnel analysis, guardrail metrics.

- Experimentation: A/B testing, power analysis and sample-size planning, interpreting results responsibly.

- Modeling: baselines → improved models → error analysis and model monitoring.

- Communication: concise summaries, dashboards, and recommendations with caveats.

End-to-end data science workflow (diagram)

Reality check

In many roles, 60–80% of the work is data cleaning, aligning on definitions, and stakeholder communication. That is not “less data science”: it is the part that makes the rest useful and trusted.

2. Choose Your Path: Role Types and Specializations

Pick a track based on what you enjoy and what local job descriptions ask for. Most beginners succeed faster by choosing an “entry-friendly” path, then specializing once the fundamentals are strong.

- Analytics / Product DS: KPIs, experiments, funnels, cohorts, stakeholder communication.

- Applied / ML-focused DS: predictive models, feature engineering, evaluation, monitoring.

- NLP / CV DS: language or vision systems; typically higher specialization expectations.

- Data engineering-leaning DS: pipelines, data quality, orchestration, analytics modeling.

Fastest entry for many candidates

Analytics-leaning roles: they reward strong SQL, correct measurement, and business understanding—skills you can prove quickly with projects.

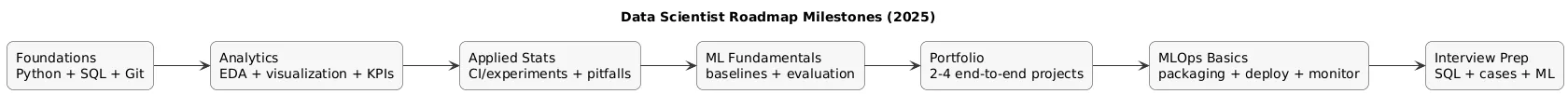

3. The 2025 Roadmap: Milestones You Can Measure

Instead of a vague “study plan”, use milestones that produce tangible outputs. The goal is to repeatedly ship small, complete deliverables: cleaned datasets, reproducible notebooks, a simple model, a written summary, a dashboard, a small API.

Roadmap milestones (diagram)

Milestone 1: data handling fundamentals (you can ship this in days)

- Load a real dataset, run quality checks (nulls, duplicates, ranges, unexpected categories).

- Write 10 practical SQL queries (joins, aggregations, window functions).

- Publish a short “EDA + insights” notebook with 3–5 clear visuals and a conclusion.
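The quality checks in the first bullet can be sketched with the standard library alone. This is a minimal example, assuming records are already loaded as a list of dicts; `quality_report`, the column names, the allowed ranges, and the sample rows are all hypothetical. With pandas, the same checks map roughly to `isna()`, `duplicated()`, and `between()`.

```python
from collections import Counter


def quality_report(rows, numeric_ranges, allowed_categories):
    """Summarize nulls, duplicate rows, out-of-range values, and unexpected categories.

    rows: list of dicts, one per record.
    numeric_ranges: {column: (min_allowed, max_allowed)}.
    allowed_categories: {column: set of expected values}.
    """
    columns = sorted({col for row in rows for col in row})
    # Missing values (None or empty string) per column.
    nulls = {col: sum(1 for r in rows if r.get(col) in (None, "")) for col in columns}

    # Exact duplicates: records identical in every column.
    signatures = Counter(tuple(sorted(r.items())) for r in rows)
    duplicates = sum(count - 1 for count in signatures.values() if count > 1)

    # Values outside the expected numeric range (missing values are skipped).
    out_of_range = {
        col: sum(1 for r in rows if r.get(col) not in (None, "") and not lo <= r[col] <= hi)
        for col, (lo, hi) in numeric_ranges.items()
    }

    # Category values that are not in the expected set (typos, stray whitespace, new codes).
    unexpected = {}
    for col, allowed in allowed_categories.items():
        seen = {r.get(col) for r in rows if r.get(col) not in (None, "")}
        unexpected[col] = sorted(seen - allowed)

    return {"nulls": nulls, "duplicates": duplicates,
            "out_of_range": out_of_range, "unexpected_categories": unexpected}


# Hypothetical sample: one duplicate, one impossible age, one badly formatted plan.
rows = [
    {"user_id": 1, "age": 34, "plan": "pro"},
    {"user_id": 2, "age": None, "plan": "free"},
    {"user_id": 2, "age": None, "plan": "free"},
    {"user_id": 3, "age": 212, "plan": "Pro "},
]
report = quality_report(rows, {"age": (0, 120)}, {"plan": {"free", "pro"}})
print(report)
```

Writing the checks as a function means they can be rerun every time the data is refreshed, instead of being eyeballed once in a notebook.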

Milestone 2: measurement and experiments (high demand in product teams)

- Define one KPI precisely (numerator/denominator, exclusions, time window).

- Build a cohort or funnel analysis and interpret changes responsibly.

- Write a mock A/B test readout: hypothesis, primary metric, guardrails, limitations.
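A KPI definition is easiest to audit when it is written as code, because the numerator, denominator, exclusions, and time window all have to be explicit. A minimal sketch, assuming one row per user event with hypothetical fields `user_id`, `signup_date`, and `converted`, and a hypothetical internal test account to exclude:

```python
from datetime import date


def conversion_rate(events, start, end, excluded_users=frozenset()):
    """Conversion rate = converted users / eligible users, for signups in [start, end).

    Exclusions (e.g. internal or test accounts) are removed from BOTH the
    numerator and the denominator. Returns None when nobody is eligible.
    """
    eligible = [
        e for e in events
        if start <= e["signup_date"] < end and e["user_id"] not in excluded_users
    ]
    # Count distinct users so the metric stays at the user grain.
    denominator = len({e["user_id"] for e in eligible})
    numerator = len({e["user_id"] for e in eligible if e["converted"]})
    return numerator / denominator if denominator else None


events = [
    {"user_id": "a", "signup_date": date(2025, 1, 3), "converted": True},
    {"user_id": "b", "signup_date": date(2025, 1, 10), "converted": False},
    {"user_id": "c", "signup_date": date(2025, 1, 20), "converted": True},
    {"user_id": "qa_bot", "signup_date": date(2025, 1, 5), "converted": True},  # excluded
    {"user_id": "d", "signup_date": date(2025, 2, 1), "converted": True},       # outside window
]
rate = conversion_rate(events, date(2025, 1, 1), date(2025, 2, 1), excluded_users={"qa_bot"})
print(f"January conversion rate: {rate:.1%}")
```

The half-open window `[start, end)` is a deliberate choice: consecutive months never overlap and never drop a day.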

Milestone 3: ML fundamentals (baseline-first)

- Train a baseline model (linear/logistic regression) and compare to a tree-based model.

- Show correct evaluation: train/test split or time split, cross-validation where appropriate.

- Document leakage checks and perform error analysis (where does it fail and why?).
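A time-based split and a trivial baseline take only a few lines. This sketch assumes rows carry a hypothetical `event_date` field and a numeric target `sales`. The baseline here is a naive mean predictor, which is simpler still than the linear baseline the milestone mentions; it serves as the floor any real model has to beat.

```python
from datetime import date


def time_split(rows, cutoff, time_key="event_date"):
    """Train on rows strictly before `cutoff`, test on rows on or after it.

    No shuffling: the test period is always later than the training period,
    which mirrors how the model will be used on future data.
    """
    train = [r for r in rows if r[time_key] < cutoff]
    test = [r for r in rows if r[time_key] >= cutoff]
    return train, test


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical daily sales.
rows = [
    {"event_date": date(2025, 1, day), "sales": sales}
    for day, sales in [(1, 10), (2, 12), (3, 11), (4, 13), (5, 15), (6, 14)]
]
train, test = time_split(rows, cutoff=date(2025, 1, 5))

# Naive baseline: predict the training mean for every test day.
train_mean = sum(r["sales"] for r in train) / len(train)
baseline_mae = mae([r["sales"] for r in test], [train_mean] * len(test))
print(f"baseline MAE: {baseline_mae:.2f}")  # a real model should beat this number
```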

Milestone 4: one “production-ish” artifact (light MLOps)

- Package the model (requirements, reproducible run).

- Expose it via a small API or batch scoring script.

- Add basic monitoring signals (errors, latency, data drift checks).
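One widely used data drift signal is the Population Stability Index (PSI): bin a feature, then sum (current share − reference share) × ln(current share / reference share) over the bins. The sketch below is one simple variant under stated assumptions: equal-width bins over the reference range, values outside that range clipped into the edge bins, and a small `eps` so empty bins do not cause a division by zero or log of zero. A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds are worth tuning per feature.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a current sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip into the edge bins
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = [float(v) for v in range(100)]  # e.g. feature values at training time
current = [v + 50 for v in reference]       # same feature, shifted upward in production
print(round(psi(reference, reference), 4))  # → 0.0
print(round(psi(reference, current), 4))    # large value: the distribution moved
```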

4. Foundations: Python, SQL, and Analytics Basics

If you are starting from zero, prioritize tools that let you work with real data quickly and repeatedly:

- SQL: SELECT, JOIN, GROUP BY, CTEs, window functions (ROW_NUMBER, LAG, SUM OVER).

- Python: pandas, NumPy, data cleaning, notebooks, basic plotting.

- Visualization: clarity over complexity; annotate key takeaways.

- Data modeling basics: facts vs dimensions, keys, grain, avoiding double-counting.
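The SQL items above (CTEs, `ROW_NUMBER`, `LAG`, `SUM OVER`) can be practiced without a database server using Python's built-in `sqlite3` module. Window functions need SQLite 3.25 or newer; `sqlite3.sqlite_version` shows the version your Python uses. The `orders` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (user_id INTEGER, order_date TEXT, amount REAL);
INSERT INTO orders VALUES
  (1, '2025-01-03', 20.0),
  (1, '2025-01-10', 35.0),
  (2, '2025-01-05', 50.0),
  (1, '2025-02-01', 15.0),
  (2, '2025-02-07', 10.0);
""")

query = """
WITH ordered AS (
  SELECT
    user_id,
    order_date,
    -- 1 = the user's first order
    ROW_NUMBER() OVER (PARTITION BY user_id ORDER BY order_date) AS order_seq,
    -- previous order date for the same user (NULL on the first order)
    LAG(order_date) OVER (PARTITION BY user_id ORDER BY order_date) AS prev_order_date,
    -- running spend per user, in date order
    SUM(amount) OVER (PARTITION BY user_id ORDER BY order_date) AS running_spend
  FROM orders
)
SELECT user_id, order_date, order_seq, prev_order_date, running_spend
FROM ordered
ORDER BY user_id, order_date;
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

The output stays at the order grain (one row per order), which is what makes the running total and the previous-order date meaningful.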

Minimum “job-ready” proof

One project that uses SQL to build a clean dataset and Python to analyze, visualize, and summarize results in a short, readable report.

5. Statistics You Need (Without Over-Studying)

Focus on applied statistics that show up in real work:

- Distributions: mean vs median, variance, skew, heavy tails, outliers.

- Sampling: bias vs variance, confidence intervals, representativeness.

- Hypothesis testing: interpreting p-values correctly, statistical power (conceptually), awareness of multiple comparisons.

- Experiment metrics: conversion rate, lift, guardrails, novelty effects.

- Causality basics: correlation vs causation, confounding, Simpson’s paradox.
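For a conversion-rate A/B test, a standard approach is a two-proportion z-test plus a confidence interval for the difference. The sketch below assumes independent users, one observation per user, and samples large enough for the normal approximation; the counts are made up for illustration. It uses `statistics.NormalDist` (Python 3.8+) to avoid external dependencies.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided z-test for the difference in conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error for the test statistic (null hypothesis: p_a == p_b).
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for a Wald confidence interval on the difference.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (diff - z_crit * se, diff + z_crit * se)

    return {"lift_abs": diff, "relative_lift": diff / p_a, "ci": ci, "p_value": p_value}


# Hypothetical test: control converts 200/4000, variant converts 250/4000.
result = two_proportion_test(conv_a=200, n_a=4000, conv_b=250, n_b=4000)
print(result)
```

Reporting the interval alongside the p-value shows the plausible size of the effect, not only whether it is distinguishable from zero.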

Practical approach

Learn statistics inside projects (A/B-style analyses, cohort comparisons). You will retain far more than you would from theory-only study.

6. Machine Learning Fundamentals (What to Learn First)

Start with ML concepts that appear in interviews and real projects:

- Supervised learning: regression and classification.

- Evaluation: cross-validation, time-based splits, avoiding leakage.

- Metrics: RMSE/MAE; precision/recall, ROC-AUC/PR-AUC; calibration basics.

- Baselines: linear/logistic regression, decision trees, gradient boosting (conceptually).

- Feature engineering: encoding, scaling, time features, handling missingness.

- Error analysis: segment performance, identify failure modes, propose fixes.
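Computing precision, recall, and a per-segment breakdown from scratch makes the metrics concrete; in practice scikit-learn's `precision_score` and `recall_score` do the same job. The labels, predictions, and `web`/`mobile` segments below are made up.

```python
from collections import defaultdict


def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def recall_by_segment(y_true, y_pred, segments):
    """Error analysis: recall computed separately for each segment value."""
    grouped = defaultdict(lambda: ([], []))
    for t, p, s in zip(y_true, y_pred, segments):
        grouped[s][0].append(t)
        grouped[s][1].append(p)
    return {s: precision_recall(t, p)[1] for s, (t, p) in grouped.items()}


y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
segments = ["web", "mobile", "web", "web", "mobile", "web", "mobile", "mobile"]

print(precision_recall(y_true, y_pred))
print(recall_by_segment(y_true, y_pred, segments))
```

In this toy data, the overall recall of 0.6 hides that the model finds no positives at all in the mobile segment, which is exactly the kind of failure mode segment-level error analysis is meant to surface.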

Most common failure

Data leakage. If your model sees future information, or the same user appears in both the training and test sets, evaluation becomes misleading.
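To keep the same user out of both sets, split by user rather than by row. A minimal sketch, assuming each row has a hypothetical `user_id` field; scikit-learn's `GroupShuffleSplit` and `GroupKFold` implement the same idea.

```python
import random


def split_by_user(rows, test_fraction=0.2, seed=42):
    """Assign whole users to train or test so no user appears in both."""
    users = sorted({r["user_id"] for r in rows})
    rng = random.Random(seed)  # fixed seed: the same split on every run
    rng.shuffle(users)
    n_test = max(1, round(len(users) * test_fraction))
    test_users = set(users[:n_test])
    train = [r for r in rows if r["user_id"] not in test_users]
    test = [r for r in rows if r["user_id"] in test_users]
    return train, test


# Hypothetical data: 10 users with 3 visits each.
rows = [{"user_id": u, "visit": v} for u in range(10) for v in range(3)]
train, test = split_by_user(rows, test_fraction=0.3)

overlap = {r["user_id"] for r in train} & {r["user_id"] for r in test}
print(len(train), len(test), overlap)  # the overlap set should be empty
```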

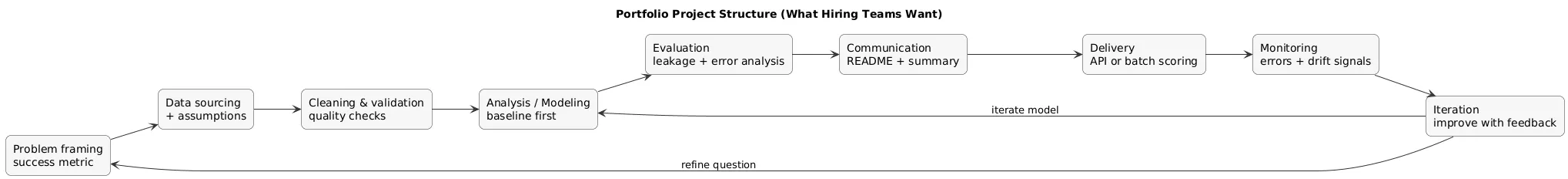

7. Portfolio Projects That Hiring Managers Respect

A strong portfolio is small and high-quality. Aim for 2–4 projects that demonstrate different skills and include a clean README. Each project should answer: “What problem did you solve, how, what did you learn, and what are the limitations?”

Project patterns that work

- EDA + storytelling: clear question, data cleaning notes, 5–8 visuals, executive summary.

- Predictive model: baseline, evaluation, leakage checks, error analysis, “next steps”.

- Time series / forecasting: seasonality, time split validation, realistic metrics.

- Applied MLOps: API or batch scoring + versioning + monitoring signals.

Portfolio structure (diagram)

What to include in every README

Problem statement, dataset source, cleaning decisions, evaluation method, results, limitations, and how to run the project end-to-end.

8. MLOps Basics: From Notebook to Production

You do not need deep MLOps to get hired, but basic “delivery literacy” is a strong differentiator. Your goal is to show you can make work reproducible and maintainable.

- Packaging: requirements.txt, environments, consistent runs.

- Reproducibility: deterministic pipelines, fixed random seeds, data version notes.

- Serving: batch scoring vs real-time API (choose based on latency and cost needs).

- Monitoring: latency/errors + data drift and model drift signals.

- Governance: auditability (which model version ran), approvals, access control.
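For reproducibility and auditability, one lightweight habit is to save a small manifest with every run: which data (by hash), which model version, which parameters and seed. The field names, version label, and hyperparameters below are hypothetical; tools such as MLflow or DVC formalize this, but a JSON record is enough for a portfolio project.

```python
import hashlib
import json
import platform
import random
from datetime import datetime, timezone

SEED = 42
random.seed(SEED)  # also seed every other RNG the pipeline uses (e.g. NumPy)


def build_run_manifest(data_bytes, model_version, params, seed):
    """Describe a run well enough to reproduce and audit it later."""
    return {
        "model_version": model_version,                         # which model ran
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),  # which data it saw
        "params": params,                                       # how it was configured
        "seed": seed,
        "python_version": platform.python_version(),
        "run_at_utc": datetime.now(timezone.utc).isoformat(),
    }


manifest = build_run_manifest(
    data_bytes=b"user_id,churned\n1,0\n2,1\n",  # in practice: the raw bytes of the input file
    model_version="churn-logreg-0.1.0",          # hypothetical version label
    params={"C": 1.0, "max_iter": 200},          # hypothetical hyperparameters
    seed=SEED,
)
print(json.dumps(manifest, indent=2))
```

Hashing the input bytes means a silently changed dataset shows up as a different fingerprint, even when the file name stays the same.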

If you want CI/CD context for deployment

See: CI/CD Pipeline Explained to understand promotion, verification, and safe releases for ML services.

9. Job Search Strategy (CV, LinkedIn, Networking)

Treat the job search like an experiment: hypotheses, iterations, measurable outputs.

- Target roles: match your portfolio to role keywords (analytics DS, applied DS, ML-focused DS).

- CV bullets: impact + method + scope (even if small).

- Networking: short, specific messages + one link to your best project.

- Job description mapping: find 5 repeated skills, then build explicit proof for them.
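The job description mapping step can be partly automated by counting how many postings mention each skill. The skill vocabulary and postings below are hypothetical, and a keyword count is only a rough first pass before reading the descriptions properly.

```python
import re
from collections import Counter

# Hypothetical skill vocabulary; extend it with terms from your own market.
SKILLS = ["sql", "python", "a/b testing", "dashboards", "machine learning",
          "statistics", "tableau", "airflow", "experimentation"]


def top_skills(job_descriptions, skills=SKILLS, k=5):
    """Count how many postings mention each skill (at most once per posting)."""
    counts = Counter()
    for text in job_descriptions:
        lowered = text.lower()
        for skill in skills:
            # Word-boundary match so "sql" does not match inside "nosql".
            if re.search(r"\b" + re.escape(skill) + r"\b", lowered):
                counts[skill] += 1
    return counts.most_common(k)


postings = [
    "We need strong SQL and Python; A/B testing experience is a plus.",
    "Build dashboards in Tableau. SQL required.",
    "Python, statistics, and experimentation. NoSQL knowledge helpful.",
]
top = top_skills(postings)
print(top)
```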

Strong project bullet (template)

“Built a churn prediction baseline (logistic regression + tree models), documented leakage checks, performed segment-based error analysis, and shipped a batch scoring script with simple monitoring signals.”

10. Interview Prep: SQL, Case Studies, and ML Questions

Most interviews evaluate three things:

- SQL: joins, aggregates, window functions, cohorts, and correctness under edge cases.

- Analytics case: define metrics, diagnose a change, propose experiments, explain trade-offs.

- ML basics: evaluation design, bias/variance intuition, leakage awareness, metric choice.
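A classic “correctness under edge cases” trap is join fan-out: joining two one-to-many tables on the same key multiplies rows and inflates sums. The sketch below uses in-memory SQLite with hypothetical `users`, `orders`, and `logins` tables; the fix is to aggregate each table to the user grain before joining.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users  (user_id INTEGER PRIMARY KEY, country TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, user_id INTEGER, amount REAL);
CREATE TABLE logins (user_id INTEGER, login_date TEXT);
INSERT INTO users  VALUES (1, 'DE'), (2, 'DE'), (3, 'FR');
INSERT INTO orders VALUES (10, 1, 30.0), (11, 1, 20.0), (12, 2, 40.0);
INSERT INTO logins VALUES (1, '2025-01-01'), (1, '2025-01-02'), (2, '2025-01-01');
""")

# Wrong: each order row is repeated once per login row, so revenue is inflated.
naive = conn.execute("""
SELECT SUM(o.amount)
FROM users u
JOIN orders o ON o.user_id = u.user_id
JOIN logins l ON l.user_id = u.user_id
""").fetchone()[0]

# Right: aggregate each table to one row per user first, then join.
# LEFT JOIN + COALESCE keeps users with no orders or logins (an edge case) at 0.
correct = conn.execute("""
WITH rev AS (SELECT user_id, SUM(amount) AS revenue FROM orders GROUP BY user_id),
     act AS (SELECT user_id, COUNT(*) AS logins FROM logins GROUP BY user_id)
SELECT u.user_id, COALESCE(rev.revenue, 0) AS revenue, COALESCE(act.logins, 0) AS logins
FROM users u
LEFT JOIN rev ON rev.user_id = u.user_id
LEFT JOIN act ON act.user_id = u.user_id
ORDER BY u.user_id
""").fetchall()

print("naive total:", naive)
print("per-user rows:", correct)
```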

High ROI practice

Explain each project out loud in 2–3 minutes: problem → approach → evaluation → result → limitation → next step. Communication is often weighted about as heavily as technical correctness.

11. Your First 90 Days in a Data Science Role

Early success is usually about trust, reliability, and speed to useful outputs:

- Learn definitions: how your company defines key metrics and source-of-truth tables.

- Ship small wins: a metric fix, a dashboard improvement, a cleaned dataset with documentation.

- Reduce manual work: automate recurring analyses and build reusable notebooks or scripts.

- Make results reproducible: one command (or one notebook) to rerun the analysis.

12. Common Mistakes (And How to Avoid Them)

- Only taking courses: no proof. Fix: ship projects with READMEs and reproducibility.

- Weak SQL: slowed down by data access. Fix: practice joins, windows, and cohort queries.

- Over-theory early: no applied delivery. Fix: learn theory as needed inside projects.

- Bad evaluation discipline: credibility loss. Fix: baselines, leakage checks, error analysis.

- Unclear communication: insights not adopted. Fix: summaries, assumptions, limitations.

13. Roadmap Checklist

Use this as a practical milestone tracker:

- Tools: Python + pandas, SQL, Git, notebooks.

- Analytics: EDA, visualization, KPI definitions, cohorts/funnels.

- Stats: distributions, confidence intervals, experiments basics.

- ML: regression/classification, evaluation, leakage awareness, error analysis.

- Portfolio: 2–4 end-to-end projects with strong READMEs.

- MLOps basics: packaging, simple deployment (API/batch), monitoring signals.

- Interviews: SQL practice + analytics cases + ML fundamentals explanations.

Fast win

Pick one dataset and iterate: EDA → model → small deployment. One evolving, high-quality project often beats five shallow ones.

14. FAQ: Becoming a Data Scientist

Do I need a degree?

Not always. A degree can help, but many hiring decisions hinge on proof: portfolio quality, SQL fluency, correct evaluation, and clear communication.

How many projects are enough?

Usually 2–4 strong end-to-end projects. Depth and clarity matter more than quantity.

What should I learn first?

Start with Python and SQL, then layer statistics and ML through projects. You want usable skills quickly, not a year of theory before shipping.

How do I pick a specialization?

Read job descriptions in your market and choose the repeated skill cluster. Start general, then specialize once your fundamentals are stable.

Key data science terms (quick glossary)

- EDA: exploratory data analysis, i.e. profiling data quality, distributions, and relationships before modeling.

- Feature Engineering: creating or transforming inputs so models can learn useful patterns (encoding, scaling, time features).

- Data Leakage: when training data contains information that would not be available at prediction time, inflating evaluation results.

- Train/Test Split: separating data for training and evaluation to estimate performance on unseen data.

- Cross-Validation: repeated training and validation on different folds to reduce sensitivity to any single split.

- Precision / Recall: classification metrics that trade off against each other. Precision is the share of predicted positives that are correct (lowered by false positives); recall is the share of actual positives the model finds (lowered by false negatives).

- MLOps: practices for deploying, monitoring, and maintaining ML systems in production.
