Cloud cost optimization is not “pick a cheaper instance once.” It is a repeatable operating discipline: allocate spend to owners, control it with budgets and guardrails, and continuously reduce waste and unit cost.

The easiest way to reason about the bill is: Total cost ≈ Usage × Rate, plus “waste” caused by idle capacity, orphaned resources, and poor defaults. This guide shows what to fix first, how to do it safely, and how to keep savings from regressing.
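As a rough illustration of that decomposition, the sketch below splits a single resource's bill into a useful part and a waste part. It assumes, for simplicity, that waste is just idle hours billed at the same rate; the function name and the numbers are hypothetical.

```python
def estimate_monthly_cost(used_hours: float, idle_hours: float, hourly_rate: float) -> dict:
    """Split a bill into useful spend (usage x rate) and waste (idle x rate)."""
    useful = used_hours * hourly_rate
    waste = idle_hours * hourly_rate  # assumption: idle capacity is billed at the same rate
    return {"useful": useful, "waste": waste, "total": useful + waste}


# A server that does real work 200 hours a month but is billed for 730.
bill = estimate_monthly_cost(used_hours=200, idle_hours=530, hourly_rate=0.25)
print(bill)
```

In this made-up case most of the bill is waste, which is why the sections below start with idle and orphaned resources before touching the rate.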

Recommended companion

If you are migrating right now, also read Cloud Migration Step-by-Step. A strong landing zone and disciplined cutovers reduce cost surprises.

1. Why Cloud Costs Spike (Even in “Good” Migrations)

Cost spikes are usually operational, not mysterious:

- Over-provisioning: sized for peak, left running 24/7.

- Idle resources: “temporary” stacks that never expired.

- No ownership: resources exist without an accountable team.

- Egress: cross-region/cross-zone chatter or data leaving the cloud.

- Misfit managed services: great defaults used at the wrong scale or with wrong retention.

Pattern you should stop early

If engineers cannot explain why costs changed week-over-week, you do not have FinOps yet—only billing.

2. FinOps Basics: Visibility, Control, Optimization

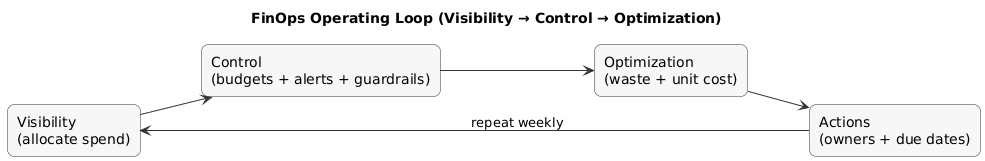

FinOps works in three loops. Run all three continuously; skipping one creates churn:

- Visibility (allocation): map spend to owner/service/environment.

- Control: budgets, anomaly alerts, and guardrails that prevent runaway spend.

- Optimization: reduce waste (idle) and reduce unit cost (rate/architecture).

FinOps operating loop (diagram)

FinOps success looks like

Every resource has an owner, budgets alert the correct team, and weekly cost reviews generate engineering actions (delete/downsize/schedule/optimize) with due dates.

3. Quick Wins You Can Do in 48 Hours

If you need savings fast, focus on changes that are (a) low risk, and (b) repeatable across accounts/projects:

- Enable budgets + alerts per environment (prod vs non-prod) and for the whole org.

- Turn on anomaly detection and route alerts to owners (not only finance).

- Delete/stop zombies (stale dev stacks, unused test DBs, abandoned clusters).

- Clean orphaned storage (unattached volumes, old snapshots)—after dependency validation.

- Restrict high-cost resources (policy/role gating) until tagging is enforced.

- Schedule non-prod off-hours (even a simple night/weekend policy saves repeatedly).

Safety rule

Never delete storage until you can answer: “Who owns it, what depends on it, what is the retention policy, and can we restore a representative dataset?”

4. Tagging & Allocation: Make Every Euro/Dollar Accountable

Allocation is the foundation. Without it, optimization is guesswork and political arguments. Use a minimal tagging standard and enforce it on creation (via policy and/or IaC modules).

Minimum recommended tags:

- owner (team accountable for cost + reliability)

- service (application/component)

- environment (prod/staging/dev)

- cost_center (finance mapping)

- expires_on (strongly recommended for non-prod / temporary stacks)

Example tagging policy (simple intent)

- Block creation of high-cost resources without owner/service/environment tags

- Auto-apply environment from account/subscription if possible

- Require expires_on for non-prod resources unless explicitly exempted

Practical rule

If a resource is untagged, treat it as unowned. Unowned resources should be scheduled for cleanup by default.
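The policy intent above could be expressed as a small validation function, for example inside a CI check or an IaC module. This is a minimal sketch, not any provider's policy engine: the function name, the `exempt_from_expiry` flag, and the ISO date format for `expires_on` are assumptions made for illustration.

```python
from datetime import date

# Tags the policy intent above requires before a resource may be created.
REQUIRED_TAGS = ("owner", "service", "environment")


def tag_violations(tags: dict, exempt_from_expiry: bool = False) -> list:
    """Return human-readable violations; an empty list means compliant."""
    violations = [f"missing tag: {name}" for name in REQUIRED_TAGS if not tags.get(name)]
    env = tags.get("environment", "")
    # Non-prod resources need an expiry date unless explicitly exempted.
    if env and env != "prod" and not exempt_from_expiry:
        expires = tags.get("expires_on")
        if not expires:
            violations.append("missing tag: expires_on (required for non-prod)")
        else:
            try:
                date.fromisoformat(expires)  # assumed format: YYYY-MM-DD
            except ValueError:
                violations.append("expires_on is not an ISO date (YYYY-MM-DD)")
    return violations


print(tag_violations({"owner": "payments", "service": "api", "environment": "dev"}))
```

A resource that returns a non-empty list would be blocked at creation time or, if it already exists, queued for the cleanup workflow.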

5. Budgets, Alerts & Anomaly Detection

Budgets are an engineering control. Configure them so they trigger action early, not after the bill arrives:

- Budgets per environment: prod and non-prod separately (different tolerance).

- Threshold alerts: e.g., 50%, 80%, 100% monthly.

- Anomaly alerts: day-over-day and week-over-week deviations.

- Routing: alerts go to the owning team (Slack/email/on-call), not only finance.

Mature pattern: tie alerts to a runbook—what to check first (top movers, new resources, egress spikes), and which mitigations are safe (scale down non-prod, stop new deployments, revert a change).
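The threshold and anomaly checks described in this section can be sketched in a few lines. Cloud providers ship their own budget and anomaly features; the code below only illustrates the logic, and the 30% deviation limit and both function names are assumed values, not recommendations from any vendor.

```python
def crossed_thresholds(spend: float, budget: float, thresholds=(0.5, 0.8, 1.0)) -> list:
    """Return the budget thresholds (as fractions) that month-to-date spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    return [t for t in thresholds if ratio >= t]


def is_anomaly(today: float, baseline: float, max_increase: float = 0.3) -> bool:
    """Flag spend that exceeds the baseline by more than max_increase.

    The baseline could be yesterday's spend (day-over-day) or the same
    weekday last week (week-over-week); 0.3 is an assumed tolerance.
    """
    if baseline <= 0:
        return today > 0  # any spend on a previously free service is worth a look
    return (today - baseline) / baseline > max_increase


print(crossed_thresholds(spend=850, budget=1000))
print(is_anomaly(today=150, baseline=100))
```

Each result would then be routed to the owning team together with the runbook link, rather than to a shared finance inbox.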

6. Eliminate Waste: Idle Compute, Zombie Resources, Orphaned Storage

Waste is spend that delivers no business value. A reliable workflow: Top spenders → owner confirmation → last-used signal → action.

Common waste categories:

- Idle compute: always-on servers with low utilization.

- “Zombie” stacks: old test deployments, forgotten preview envs.

- Orphaned storage: unattached disks, snapshot sprawl, log retention without limits.

- Networking leftovers: idle gateways/LBs, unused IPs, cross-zone traffic.

High-leverage starting point

Pick the top 20 resources by monthly cost. You will usually find 60–80% of “easy waste” there.
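One way to produce that shortlist from a billing export is to rank resources by cost and check how much of the total the top entries cover. The sketch assumes the export has already been reduced to a mapping of resource name to monthly cost; the names and figures are made up.

```python
def top_spenders(costs: dict, n: int = 20):
    """Return the n most expensive resources and their share of total spend."""
    ranked = sorted(costs.items(), key=lambda item: item[1], reverse=True)
    top = ranked[:n]
    total = sum(costs.values())
    share = sum(cost for _, cost in top) / total if total else 0.0
    return top, share


monthly = {"db-main": 500.0, "vm-a": 300.0, "vm-b": 150.0, "bucket": 50.0}
top, share = top_spenders(monthly, n=2)
print(top, share)
```

The resulting list feeds the workflow above: confirm the owner, check the last-used signal, then act.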

7. Rightsize Compute (Without Breaking Performance)

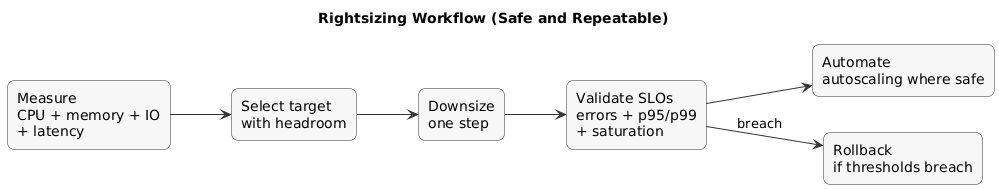

Rightsizing is safe when you treat it like a performance change with rollback. Use metrics (CPU, memory, IO, latency) and change in small steps.

Rightsizing workflow (diagram)

- Measure typical peak (not only averages) and identify the constraint (CPU vs memory vs IO).

- Select target with headroom (commonly 30–50% above typical peak, depending on workload).

- Change gradually (one step down) and monitor SLO indicators (errors, p95/p99 latency, saturation).

- Rollback if SLO indicators breach thresholds.

- Automate with autoscaling where it is safe and predictable.

Common failure mode

Rightsizing based on CPU alone breaks memory-bound workloads. Always inspect memory and disk/network IO.
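To make the CPU-versus-memory point concrete, the sketch below picks the cheapest size that covers both peaks plus headroom. The size catalog, its prices, and the function name are hypothetical; a real decision would also look at IO and latency, as noted above.

```python
# Hypothetical instance catalog; names, shapes, and prices are illustrative only.
CATALOG = [
    {"name": "small", "vcpu": 2, "mem_gib": 4, "hourly": 0.05},
    {"name": "medium", "vcpu": 4, "mem_gib": 8, "hourly": 0.10},
    {"name": "large", "vcpu": 8, "mem_gib": 32, "hourly": 0.40},
]


def pick_size(peak_cpu: float, peak_mem_gib: float, catalog: list, headroom: float = 0.3):
    """Return the cheapest size that fits peak CPU and memory plus headroom, or None."""
    need_cpu = peak_cpu * (1 + headroom)
    need_mem = peak_mem_gib * (1 + headroom)
    fits = [s for s in catalog if s["vcpu"] >= need_cpu and s["mem_gib"] >= need_mem]
    return min(fits, key=lambda s: s["hourly"]) if fits else None


# CPU alone would suggest "small", but the 5 GiB memory peak rules it out.
print(pick_size(peak_cpu=1.0, peak_mem_gib=5.0, catalog=CATALOG)["name"])
```

Even with a computed target, moving one size at a time and watching the SLO indicators remains the safer path.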

8. Schedule Non-Prod Environments (The Easiest Recurring Savings)

Non-prod environments are the easiest recurring savings because they are often overbuilt and underused. Practical options:

- Night/weekend shutdown (or scale-to-zero) for dev/staging.

- Smaller defaults for non-prod (instance/DB/storage tiers).

- Ephemeral preview environments (auto-delete after merge).

- TTL tagging (expires_on) with automated cleanup jobs.
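Two of these options reduce to simple checks. The sketch below estimates the fraction of hours saved by a fixed schedule and tests whether an `expires_on` tag has passed. It assumes hourly billing that stops while a resource is off and an ISO date in the tag; both function names are illustrative.

```python
from datetime import date

HOURS_PER_WEEK = 24 * 7


def schedule_savings_fraction(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of billed hours avoided compared with running 24/7."""
    running = hours_per_day * days_per_week
    return 1 - running / HOURS_PER_WEEK


def is_expired(tags: dict, today: date) -> bool:
    """True when the resource carries an expires_on date earlier than today."""
    expires = tags.get("expires_on")
    return bool(expires) and date.fromisoformat(expires) < today


# Dev environment that runs 12 hours on weekdays only.
print(round(schedule_savings_fraction(12, 5), 3))
print(is_expired({"expires_on": "2024-01-01"}, today=date(2024, 6, 1)))
```

A cleanup job would typically notify the owner first and only delete after a grace period, in line with the safety rule on storage.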

9. Storage Optimization: Lifecycle, Tiering, and Cleanup

Storage costs compound quietly. You want explicit retention and lifecycle rules:

- Lifecycle policies: move older objects/logs to cheaper tiers automatically.

- Retention limits: delete logs/snapshots after X days unless exempted.

- Compression and sampling: reduce log volume without losing signal.

- Backup hygiene: fewer, meaningful restore points; test restores regularly.
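Lifecycle and retention rules are usually configured in the storage service itself, but the decision they encode is simple. The sketch below shows that decision as a function of object age; the tier names and the 30/90/365-day cutoffs are assumed values for illustration, not defaults of any provider.

```python
def lifecycle_action(age_days: int, warm_after: int = 30, cold_after: int = 90,
                     delete_after: int = 365, exempt: bool = False) -> str:
    """Decide what a lifecycle rule would do with an object of the given age."""
    # Exempted objects are never deleted, but they still move to cheaper tiers.
    if age_days >= delete_after and not exempt:
        return "delete"
    if age_days >= cold_after:
        return "cold"
    if age_days >= warm_after:
        return "warm"
    return "hot"


for age in (10, 45, 120, 400):
    print(age, lifecycle_action(age))
```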

10. Data Transfer & Egress: The Silent Budget Killer

Egress spikes are usually architectural: chatty services across zones/regions, analytics exports, CDN misconfiguration, or heavy telemetry out of region.

- Keep chatty components close (same region/zone where possible).

- Use caching/CDN for repeatable payloads.

- Compress responses and batch transfers.

- Audit cross-region replication and only replicate what you must.

11. Commitments & Discounts: Reservations, Savings Plans, CUDs

Commitments reduce the rate for steady usage. Treat them as a portfolio decision: only buy commitments when you trust your baseline and have owners who sign off on the term.

- Reserved capacity / instances: best for stable, long-lived workloads.

- Savings plans / flexible commitments: useful when you want rate reduction with more flexibility.

- CUDs (committed use discounts): the same concept; commit to a level of usage in exchange for lower pricing.
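Why the baseline matters can be shown with a simplified model: a commitment is paid for whether or not it is used, so it only saves money above a break-even utilization. The sketch assumes a single hourly rate, a flat discount, and on-demand pricing for any usage beyond the commitment; real commitment products have more terms than this.

```python
def commitment_saving(on_demand_rate: float, discount: float,
                      committed_hours: float, used_hours: float) -> float:
    """Saving versus pure on-demand for one period; negative means a loss."""
    committed_cost = committed_hours * on_demand_rate * (1 - discount)  # paid regardless of use
    overflow_hours = max(used_hours - committed_hours, 0)  # usage beyond the commitment
    with_commitment = committed_cost + overflow_hours * on_demand_rate
    without_commitment = used_hours * on_demand_rate
    return without_commitment - with_commitment


# With a 25% discount, break-even sits at 75% utilization of the commitment.
for used in (100, 75, 50):
    print(used, commitment_saving(1.0, 0.25, committed_hours=100, used_hours=used))
```

In this model the break-even utilization is simply one minus the discount, which is why volatile or still-migrating workloads are poor candidates.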

12. Spot/Preemptible + Autoscaling (High Leverage for Stateless Work)

For stateless tiers and batch processing, spot/preemptible can be a major lever—if you design for interruption: retries, idempotency, graceful shutdown, and state stored outside nodes.

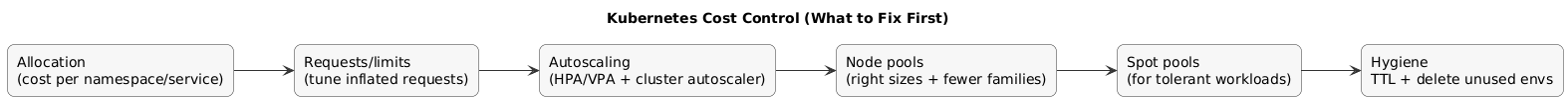
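Designing for interruption mostly means that re-running work is harmless. The sketch below processes a batch with retries and an idempotency check against a store of completed job IDs. The `Interrupted` exception and the in-memory set are stand-ins: in practice the signal comes from the platform and the store must live outside the node.

```python
class Interrupted(Exception):
    """Stand-in for a spot/preemptible interruption hitting a worker."""


def run_batch(job_ids, process, done, max_attempts: int = 3):
    """Process jobs with retries; skip anything already recorded in `done`.

    `done` is a set-like store of completed job IDs. It is assumed to be
    durable and external to the node in real use, so a replacement worker
    can resume without repeating finished jobs.
    """
    for job_id in job_ids:
        if job_id in done:  # idempotency: never redo completed work
            continue
        for _ in range(max_attempts):
            try:
                process(job_id)
                done.add(job_id)  # record completion only after success
                break
            except Interrupted:
                continue  # retry the same job
    return [j for j in job_ids if j not in done]  # jobs still outstanding


def handler(job_id):
    print("processing", job_id)


completed = {"a"}
print(run_batch(["a", "b", "c"], handler, completed))
```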

13. Kubernetes Cost Optimization (Clusters, Nodes, and Requests)

Kubernetes costs balloon when requests/limits are undisciplined and baseline node capacity never scales down. Your first targets:

- Allocation: show cost per namespace/service/team.

- Requests/limits tuning: reduce inflated requests that force overprovisioning.

- Autoscaling: HPA/VPA where appropriate, and cluster autoscaler for nodes.

- Node pool discipline: fewer instance families, right-sized pools, spot pools for suitable workloads.

- Garbage collection: expire preview envs, remove unused namespaces and volumes.

Kubernetes cost control (diagram)
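Inflated requests can be spotted by comparing what a workload asks for with what it actually uses. The sketch below works on CPU in millicores and assumes a p95 usage figure is already available from monitoring; the 30% headroom and the function names are illustrative choices.

```python
def request_inflation(requested_millicores: int, p95_used_millicores: int) -> float:
    """Ratio of requested CPU to observed p95 usage; well above 1 suggests inflation."""
    if p95_used_millicores <= 0:
        return float("inf")
    return requested_millicores / p95_used_millicores


def suggested_request(p95_used_millicores: int, headroom_pct: int = 30) -> int:
    """p95 usage plus headroom, rounded up to a whole millicore."""
    return (p95_used_millicores * (100 + headroom_pct) + 99) // 100


# A pod requesting a full core while its p95 usage is a quarter of that.
print(request_inflation(1000, 250))
print(suggested_request(250))
```

Lower requests only reduce the bill once the cluster autoscaler is allowed to remove the nodes they free up.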

14. Architecture Choices That Reduce Unit Cost

Some savings come from design, not just tuning: caching, async processing, right managed service choices, and removing noisy cross-zone traffic. Track at least one unit metric: cost per 1k requests, per job run, per GB processed, or per active customer.
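A unit metric is a single division, but computing it the same way every week is what makes it useful. The sketch below derives cost per 1k requests from a period's allocated cost and request count; the function name and the figures are made up.

```python
def cost_per_1k_requests(total_cost: float, request_count: int) -> float:
    """Allocated cost for a period divided by thousands of requests served."""
    if request_count <= 0:
        raise ValueError("request_count must be positive")
    return total_cost * 1000 / request_count


# 1,200 in allocated spend for 4 million requests.
print(cost_per_1k_requests(1200.0, 4_000_000))
```

If total spend rises while this number falls, the growth is coming from usage rather than from waste.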

15. Governance & Guardrails That Prevent Surprise Bills

The cheapest optimization is preventing waste from being created. Strong guardrails are specific, automated, and reviewable:

- Policy-as-code: enforce tags, encryption, and restrictions for expensive services.

- IaC + code review: infrastructure changes reviewed like application code.

- Golden templates: approved modules with safe defaults (limits, retention, logs).

- Quotas/limits: prevent “accidental scale” in non-prod.

- Alert runbooks: every cost alert maps to a checklist and an owner.

Predictable root cause

Surprise bills happen when expensive resources can be created quickly without tags, review, or alerts. Fixing governance prevents repeat incidents.

16. A Simple Weekly Cost Review Rhythm

- Top movers: what increased most week over week (owner must explain).

- Top waste candidates: idle compute, orphaned storage, unused environments.

- Actions: 5–10 remediation items with owners and due dates.

- Commitments: utilization check for reservations/savings plans/CUDs.

- Unit cost: track one metric tied to product usage.
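The "top movers" item can be prepared before the meeting from two weekly cost summaries. The sketch assumes each summary is a mapping of service name to weekly cost; services that appear in only one week are treated as zero in the other.

```python
def top_movers(last_week: dict, this_week: dict, n: int = 5) -> list:
    """Return the n largest week-over-week cost increases as (service, delta) pairs."""
    services = set(last_week) | set(this_week)
    deltas = {s: this_week.get(s, 0.0) - last_week.get(s, 0.0) for s in services}
    # Largest increase first; break ties by name so the output is stable.
    return sorted(deltas.items(), key=lambda item: (-item[1], item[0]))[:n]


last = {"api": 100.0, "db": 200.0, "batch": 50.0}
this = {"api": 180.0, "db": 190.0, "batch": 50.0, "preview": 40.0}
print(top_movers(last, this, n=2))
```

Each entry on the list then goes to its owner for an explanation and, where needed, a remediation item with a due date.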

17. Cloud Cost Optimization Checklist

- Allocation: mandatory tags + showback/chargeback reports.

- Control: budgets, thresholds, anomaly alerts, and routing to owners.

- Waste: cleanup process for idle/zombie/orphaned resources.

- Compute: rightsizing + autoscaling + scheduling non-prod.

- Storage: lifecycle + retention + restore tests.

- Egress: audit major transfer paths and reduce cross-region chatter.

- Commitments: only after stable baseline + owner sign-off.

- K8s: allocate by namespace, tune requests/limits, scale nodes down.

- Governance: IaC + policy-as-code + golden templates.

- Rhythm: weekly review with actions and accountability.

18. FAQ: Cloud Cost Optimization

What is FinOps in simple terms?

FinOps is shared accountability for cloud spend: engineers and finance align on allocation, control, and continuous optimization.

What are the fastest cost optimization wins?

Budgets/alerts, strict tagging, deleting idle resources, scheduling non-prod, and cleaning orphaned storage after validation.

Are commitments always worth it?

Only for steady usage you trust. If you are still migrating or usage is volatile, prioritize autoscaling and reduce waste first.

Why does Kubernetes get expensive?

Inflated requests/limits, oversized baseline nodes, and environments that never scale down. Fix allocation + scaling + hygiene.

How do I prevent surprise bills?

Mandatory tagging and owners, budgets + anomaly detection, guardrails restricting expensive resources, IaC + review, and weekly cost reviews.

Key cloud terms (quick glossary)

- FinOps: A practice for managing cloud cost with shared accountability across engineering, finance, and product.

- Rightsizing: Adjusting compute/DB/storage size to real usage with safe headroom.

- Autoscaling: Automatically scaling capacity up/down based on demand signals.

- Commitment Discounts: Lower pricing in exchange for committing to usage/spend over a term.

- Spot / Preemptible: Discounted capacity that can be interrupted; best for fault-tolerant work.

- Egress: Data transferred out of the cloud (often a hidden cost driver).

- Unit Cost: Cost per business outcome (e.g., cost per 1k requests or per job run).
