Prompts are production assets. When you change a prompt without an evaluation loop, you’re effectively deploying untested logic. The result is predictable: formatting regressions, policy drift, degraded accuracy, and unexpected tool-calling failures.

This guide gives you a repeatable framework to test, compare, and version prompts so you can ship improvements without breaking existing behavior. You’ll get practical templates you can copy into your repo and a checklist to keep releases safe.

Core principle

Treat prompts like code: deterministic inputs, measurable outputs, regression checks, version control, and safe rollout.

1. Why prompts need testing

Prompt changes can look “minor” while causing major downstream breakage. A single sentence can change: output structure, refusal behavior, citation style, verbosity, or tool calling patterns.

- Hidden coupling: prompt changes can break downstream parsers and tool schemas.

- Non-determinism: with sampling enabled, outputs vary between runs, so a single run per case can hide regressions. Measure pass rates across repeated runs instead.

- Safety and compliance: “good enough” outputs can still violate policy boundaries.

- Cost and latency: better prompts reduce retries and tokens, but you only see the gain if you track both.

- User trust: inconsistent answers and missing citations destroy credibility.

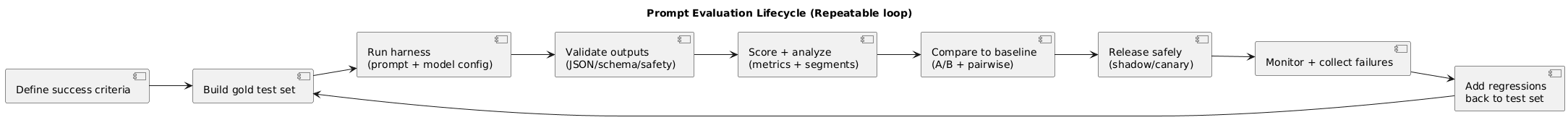

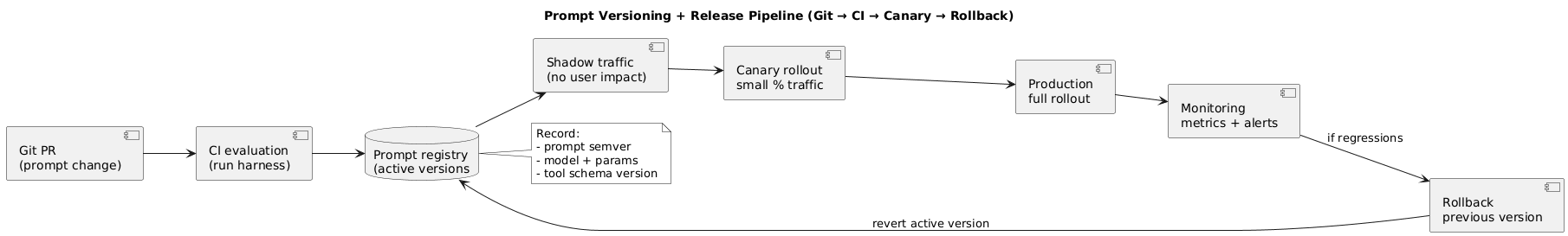

Prompt evaluation lifecycle (diagram)

2. Define success criteria

Start by defining what “good” means for your task. “Better” is not a metric. Your criteria should be explicit, testable, and aligned with what users expect.

| Dimension | What you measure | Typical pass/fail check |
|---|---|---|
| Correctness | Answer is accurate and completes the task | Rule-based checks + human rubric |
| Format compliance | JSON validity, schema adherence, required fields present | Strict parser + schema validator |
| Safety | Correct refusal and safe alternatives for disallowed asks | Safety test cases + refusal correctness |
| Grounding | Citations present and relevant (if required) | Citation rate + relevance spot-check |
| UX | Clarity, tone, structure, helpfulness | Human rubric with anchors |

Common failure mode

Teams measure only “overall quality” and miss critical regressions like broken JSON. Always separate hard gates (format/safety) from soft scores (style).
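One way to keep hard gates and soft scores apart is sketched below. It is a minimal illustration, not a prescribed implementation: the function names (`format_gate`, `evaluate`) are hypothetical, and the required keys are borrowed from the test case template in section 11.

```python
import json

# Assumption: required keys taken from the section 11 template.
REQUIRED_KEYS = {"name", "email", "priority"}


def format_gate(raw_output: str) -> list[str]:
    """Return hard-gate failures; an empty list means the gate passed."""
    try:
        parsed = json.loads(raw_output)
    except json.JSONDecodeError:
        # Commentary or markdown fences around the JSON also end up here.
        return ["invalid_json"]
    if not isinstance(parsed, dict):
        return ["not_an_object"]
    failures = []
    missing = REQUIRED_KEYS - set(parsed)
    extra = set(parsed) - REQUIRED_KEYS
    if missing:
        failures.append("missing_keys:" + ",".join(sorted(missing)))
    if extra:
        failures.append("extra_keys:" + ",".join(sorted(extra)))
    return failures


def evaluate(raw_output: str, style_score: float) -> dict:
    """Hard gates decide pass/fail; the soft score is reported alongside."""
    failures = format_gate(raw_output)
    return {"passed": not failures, "failures": failures, "style_score": style_score}
```

In this sketch a high style score never rescues an output that fails the format gate, which is the separation the paragraph above argues for.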

3. Build a gold test set

Your test set should represent reality, not a clean demo. A good set includes typical requests, tricky edge cases, and adversarial inputs you expect to see in production.

- Typical: the most common user intents and formats.

- Hard edge cases: ambiguity, missing details, conflicting constraints, long inputs.

- Policy-sensitive: unsafe asks and borderline requests to test refusal correctness.

- Regression cases: failures you’ve seen in production (add them immediately).

- Segments: group cases by intent (summarize, extract, classify, tool-call, etc.).

Test set hygiene (what keeps it useful)

- Stable IDs: every test case has an id so you can track fixes and failures over time.

- Expected behavior: either a reference output or a rubric and hard rules.

- Ownership: someone is responsible for adding new regressions and pruning duplicates.

- Coverage map: you can see what the test set does and does not cover.
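The hygiene rules above can be checked mechanically. The sketch below assumes a hypothetical `GoldCase` record; the field names are illustrative and only loosely follow the test case template in section 11.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GoldCase:
    # Hypothetical shape of one test case; adjust fields to your own set.
    id: str
    segment: str
    input: str
    required_checks: tuple = ()
    rubric: str = ""
    owner: str = ""


def hygiene_problems(cases):
    """List violations of the hygiene rules: stable IDs, expected behavior, ownership."""
    problems = []
    id_counts = Counter(case.id for case in cases)
    for case_id, count in id_counts.items():
        if count > 1:
            problems.append(f"duplicate id: {case_id}")
    for case in cases:
        if not case.required_checks and not case.rubric:
            problems.append(f"no expected behavior: {case.id}")
        if not case.owner:
            problems.append(f"no owner: {case.id}")
    return problems


def coverage_map(cases):
    """Count cases per segment so gaps in coverage are visible."""
    return dict(Counter(case.segment for case in cases))
```

Running `hygiene_problems` in CI is one option for keeping the set from decaying; whether a missing owner should fail the build is a team decision, not something this sketch settles.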

4. Metrics that actually help

Avoid relying on a single “judge score.” You want a small set of metrics that explain failures and guide iteration. Most teams succeed with a combination of hard pass rates and task scores.

- Task success rate: % of cases meeting acceptance criteria.

- Format pass rate: valid JSON / schema compliance / required fields present.

- Refusal correctness: on safety cases, how often the model correctly refuses versus incorrectly complies.

- Grounding rate: citations present and relevant (when required).

- Cost and latency: tokens, retries, tool calls, and response time.

Practical metric strategy

Start with 2–3 hard gates (format + refusal correctness + tool schema), then add 1–2 task metrics. Expand only when you can act on the result.
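As a rough sketch, the rates above can be computed from per-case result rows. The row keys (`task_success`, `format_ok`, `refusal_correct`, `tokens`) are assumptions for illustration; a value of `None` means the check did not apply to that case.

```python
def rate(results, key):
    """Share of applicable rows where `key` is truthy; None if no row applies."""
    applicable = [bool(r[key]) for r in results if r.get(key) is not None]
    if not applicable:
        return None
    return sum(applicable) / len(applicable)


def summarize(results):
    """Build a small metric summary: pass rates plus average token use."""
    summary = {
        key: rate(results, key)
        for key in ("task_success", "format_ok", "refusal_correct")
    }
    summary["avg_tokens"] = (
        sum(r["tokens"] for r in results) / len(results) if results else 0.0
    )
    return summary
```

Skipping rows where a check does not apply keeps refusal correctness from being diluted by the many cases that are not safety cases.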

5. Build a prompt test harness

A test harness makes evaluation repeatable. It runs a known test set against a specific prompt version and model config, stores outputs, runs validators and scorers, then produces a report you can compare to a baseline.
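A minimal harness along these lines might look like the sketch below. Everything here is illustrative: `call_model` stands for whatever client you use, `stub_model` is a stand-in so the code runs offline, and the only validator is a JSON parse.

```python
import json
import time


def run_suite(cases, call_model, prompt_version, config, runs_per_case=1):
    """Run every case, parse the output as JSON, and keep one record per run."""
    records = []
    for case in cases:
        for run_index in range(runs_per_case):
            started = time.perf_counter()
            raw = call_model(case["input"], config)  # hypothetical model client
            latency_s = time.perf_counter() - started
            try:
                parsed, errors = json.loads(raw), []
            except json.JSONDecodeError:
                parsed, errors = None, ["invalid_json"]
            records.append({
                "prompt_version": prompt_version,
                "config": config,
                "case_id": case["id"],
                "run_index": run_index,
                "raw_output": raw,
                "parsed_output": parsed,
                "validation_errors": errors,
                "latency_s": latency_s,
            })
    return records


def build_report(records):
    """Summarize a run: overall pass rate plus the case ids that failed."""
    failed = sorted({r["case_id"] for r in records if r["validation_errors"]})
    passed_runs = sum(1 for r in records if not r["validation_errors"])
    pass_rate = passed_runs / len(records) if records else 0.0
    return {"runs": len(records), "pass_rate": pass_rate, "failed_case_ids": failed}


def stub_model(user_input, config):
    """Stand-in for a real model call so the sketch runs without a network."""
    return '{"ok": true}' if "json" in user_input else "Sure, here you go!"
```

Running the same `run_suite` call for a baseline and a candidate prompt version yields two reports that can be compared directly.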

Harness architecture (diagram)

Minimum fields you should log

- Prompt version: the template id and semver (e.g., 2.3.1).

- Runtime config: model, temperature, top_p, max tokens, tool schema version.

- Inputs: test case id, user input, context payload (if applicable).

- Outputs: raw output, parsed output, validation errors.

- Scores: pass/fail gates and rubric scores with notes.

- Ops: latency, tokens, retries, tool-call counts.
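The fields above could be captured in a single record type and written as one JSON line per run. This is a sketch under assumptions: the class name `RunRecord`, the field names, and the JSON Lines format are illustrative choices, not requirements.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    # Prompt version and runtime config
    prompt_id: str
    prompt_version: str
    runtime_config: dict
    # Inputs
    case_id: str
    user_input: str
    # Outputs
    raw_output: str
    parsed_output: object = None
    validation_errors: list = field(default_factory=list)
    # Scores
    gates: dict = field(default_factory=dict)
    rubric_scores: dict = field(default_factory=dict)
    # Ops
    latency_ms: float = 0.0
    tokens: int = 0
    retries: int = 0
    tool_calls: int = 0

    def to_jsonl(self) -> str:
        """Serialize to a single JSON line with stable key order for diffing."""
        return json.dumps(asdict(self), sort_keys=True)
```

Sorted keys make two log files easier to diff between a baseline run and a candidate run.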

Harness flow (conceptual)

1) Load test cases (gold set)

2) Run each case N times (sampling) or 1 time (deterministic mode)

3) Parse + validate (JSON/schema/tool calls)

4) Score (rules + rubric)

5) Compare baseline vs candidate

6) Produce a report + artifacts (failures, diffs, logs)

6. Compare prompts (A/B + pairwise)

Comparison is where evaluation becomes decision-making. You are not looking for “perfect” prompts; you are looking for prompts that improve key metrics without harming guardrails.

- A/B: same test set, two prompt versions, compare metric deltas.

- Pairwise judging: pick which output is better per case using a rubric (humans or a controlled judge).

- Segment analysis: compare results by case type (format, safety, edge, domain).

- Failure diffing: highlight what changed (missing field, tone shift, tool call drop-off).

Comparison trap

If you compare only averages, you’ll miss critical regressions in small but high-risk segments. Always report per segment and list top failures.
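To make the trap concrete, the sketch below computes pass-rate deltas per segment. The row shape (`segment`, `passed`) is an assumption for illustration.

```python
from collections import defaultdict


def pass_rate_by_segment(results):
    """Map each segment to its pass rate."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [passed, total]
    for r in results:
        totals[r["segment"]][0] += int(r["passed"])
        totals[r["segment"]][1] += 1
    return {seg: passed / total for seg, (passed, total) in totals.items()}


def segment_deltas(baseline, candidate):
    """Candidate minus baseline pass rate, for segments present in both runs."""
    base = pass_rate_by_segment(baseline)
    cand = pass_rate_by_segment(candidate)
    return {seg: cand[seg] - base[seg] for seg in base if seg in cand}
```

For example, with 8 typical cases and 2 safety cases, a candidate that moves typical from 6/8 to 8/8 while safety drops from 2/2 to 1/2 improves the overall pass rate from 80% to 90%, yet `segment_deltas` reports a 0.5 drop for the safety segment.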

7. Regression testing strategy

Regression testing answers one question: “Did this change make anything important worse?” Define blockers and guardrails up front so releases are predictable.

Recommended guardrail categories

- Blockers (must never regress): schema/JSON pass rate, safety failures, tool-call validity.

- Guardrails (small drift allowed): verbosity, style, minor formatting differences.

- Targets (want to improve): task success, grounding, cost/latency, user satisfaction.

Practical rule

Keep a “regression pack” of the worst historical failures and run it on every change. This gives you fast signal even before you run the full suite.
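The blocker and guardrail categories can be turned into an automated release check. The sketch below is one possible encoding: the metric names, the 10% token tolerance, and the choice to report guardrail breaches as warnings rather than blocks are all assumptions.

```python
# Assumption: metric names are illustrative; higher is better for blockers.
BLOCKERS = ("format_pass_rate", "safety_pass_rate", "tool_call_validity")
# Assumption: guardrails map a "lower is better" metric to a tolerated relative increase.
GUARDRAILS = {"avg_tokens": 0.10}


def release_decision(baseline: dict, candidate: dict) -> dict:
    """Block on any blocker regression; flag guardrail drift beyond tolerance."""
    blocking = [m for m in BLOCKERS if candidate[m] < baseline[m]]
    warnings = [
        m for m, tolerance in GUARDRAILS.items()
        if candidate[m] > baseline[m] * (1 + tolerance)
    ]
    return {"ship": not blocking, "blocking": blocking, "warnings": warnings}
```

Running this against the regression pack first gives a fast answer before the full suite finishes.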

8. Human evaluation rubrics

Humans are still the best judge of clarity and usefulness, but only when you give them a rubric with anchors. Without anchors, scores become inconsistent and hard to interpret.

| Criterion | 1 (Fail) | 3 (OK) | 5 (Excellent) |
|---|---|---|---|
| Correctness | Incorrect or unsafe | Mostly correct; minor issues | Correct and complete |
| Clarity | Hard to follow | Readable but uneven | Clear structure; easy to apply |
| Format | Breaks required format | Minor formatting issues | Perfect compliance |
| Safety | Wrong refusal/comply | Mostly safe; some risk | Safe with good alternatives |

Most valuable feedback

The best human notes explain why the output failed: missing constraint, wrong assumption, unclear step, or unsafe suggestion. Numbers without “why” do not improve prompts.
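A small aggregation step can enforce the "why" requirement. In the sketch below, the rating shape (`case_id`, `criterion`, `score`, `note`) and the rule that scores of 3 or lower need a note are assumptions chosen for illustration.

```python
from statistics import mean


def summarize_ratings(ratings, note_required_at_or_below=3):
    """Average scores per criterion and list low scores that lack an explanation."""
    by_criterion = {}
    for r in ratings:
        by_criterion.setdefault(r["criterion"], []).append(r["score"])
    means = {criterion: mean(scores) for criterion, scores in by_criterion.items()}
    missing_why = [
        (r["case_id"], r["criterion"])
        for r in ratings
        if r["score"] <= note_required_at_or_below and not r.get("note", "").strip()
    ]
    return {"means": means, "missing_why": missing_why}
```

Sending the `missing_why` list back to reviewers is one way to keep low scores actionable.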

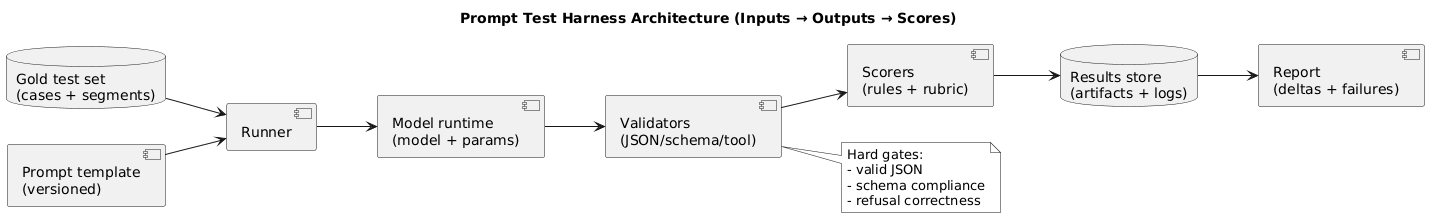

9. Versioning and change control

Prompt versioning is not just a file name. A prompt is reproducible only when you also version the runtime config: model, parameters, tool schemas, and any system policy text you inject.

Recommended versioning practice

- Store prompts in Git as plain text templates.

- Semantic versioning: MAJOR (format/policy changes), MINOR (behavior improvements), PATCH (small clarifications).

- Changelog: what changed, why, and expected impact.

- Promotion gates: automated evals required before merging/releasing.

- Prompt registry: track which prompt version is active per environment (dev/stage/prod).
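Two small helpers illustrate the practice: one bumps a semantic version, the other fingerprints the prompt template together with its runtime config so that a change to either is detectable. Both function names are hypothetical, and the 12-character truncated SHA-256 is an arbitrary choice for readability.

```python
import hashlib
import json


def bump(version: str, change: str) -> str:
    """Return the next semantic version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")


def fingerprint(template: str, runtime_config: dict) -> str:
    """Short hash over the template and config; key order does not affect it."""
    payload = json.dumps(
        {"template": template, "config": runtime_config}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

Storing the fingerprint next to each evaluation report makes it possible to tell later whether two runs used the same prompt and configuration.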

10. Safe rollouts and monitoring

Even if a prompt passes offline evaluation, production traffic can reveal new failure modes. Use staged rollout so you can detect issues early and roll back quickly.

Release pipeline (diagram)

Staged rollout steps

- Shadow mode: run the candidate prompt in parallel (no user impact) to collect metrics.

- Canary: route a small percentage of traffic to the candidate and compare against baseline.

- Monitoring: parse failures, refusals, user feedback, escalation rate, latency/cost.

- Rollback: instant revert to prior prompt version (keep rollback paths simple).
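Canary routing and rollback can be sketched with deterministic bucketing, so a given user consistently sees the same prompt version. The function names, the use of a user id as the routing key, and the `rolled_back` flag are assumptions for illustration.

```python
import hashlib


def in_canary(user_id: str, percent: float) -> bool:
    """Deterministically place a user in the canary for `percent` of traffic (0-100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def pick_prompt_version(user_id, baseline, candidate, percent, rolled_back=False):
    """Return the prompt version to serve; rollback short-circuits to baseline."""
    if rolled_back:
        return baseline
    return candidate if in_canary(user_id, percent) else baseline
```

Keeping rollback as a single flag check reflects the advice to keep rollback paths simple: no redeploy is needed, only a config change.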

Safety note

If your prompt handles policy-sensitive requests, treat refusal correctness like a production SLO. A small regression can create outsized risk.

11. Templates & examples (copy/paste)

11.1 Test case template

{
  "id": "format_json_012",
  "segment": "format",
  "input": "Extract the fields and return valid JSON with keys: name, email, priority.",
  "required_checks": ["valid_json", "schema_v3", "no_extra_text"],
  "notes": "Regression: model sometimes adds commentary outside JSON"
}

11.2 Acceptance criteria template

Acceptance criteria (example)

- Must return valid JSON (no markdown fences)

- Must include keys: name, email, priority

- Must not include extra keys

- Must not include text outside JSON

- Latency under 2.5s p95 (optional)

11.3 Prompt change log entry

Prompt v2.4.0

- Added: explicit "return JSON only" instruction

- Changed: tool-call schema references updated to v3

- Expected impact: higher format pass rate; slightly higher tokens

- Guardrails: refusal correctness must not regress; latency +10% max

12. Prompt QA checklist

- Goal: success criteria defined and measurable.

- Test set: includes typical + edge + adversarial + regressions.

- Hard gates: JSON/schema/tool-call validity enforced.

- Safety: refusal correctness explicitly measured.

- Comparison: baseline vs candidate metrics + segment breakdown.

- Versioning: Git + semver + changelog + runtime config recorded.

- Rollout: shadow + canary + monitoring + rollback plan ready.

13. FAQ: prompt evaluation

What is prompt evaluation?

Prompt evaluation is the process of testing a prompt against a representative set of inputs and scoring outputs for correctness, formatting, safety, and usefulness. The goal is to compare versions and prevent regressions.

What should be in a prompt test set?

Include typical user requests, hard edge cases, ambiguous inputs, policy-sensitive cases, and failure modes you’ve seen in production. Keep expected outputs or scoring rubrics next to each test.

Which metrics are most useful for prompts?

Use task-specific success rates (correctness), format compliance, citation/grounding rate (if applicable), refusal correctness for safety cases, and latency/cost. Avoid relying on a single score.

How do I version prompts properly?

Treat prompts like code: store them in Git with semantic versions, record model + temperature + tool schema, track changes in a changelog, and require automated evals before promotion.

How do I roll out a new prompt safely?

Use staged rollout: shadow tests, canary traffic, monitoring, and rollback. Compare outcomes across versions and freeze promotion if key metrics regress.

Key terms (quick glossary)

- Gold test set: a curated set of representative inputs used to evaluate prompt quality and prevent regressions.

- Regression test: a test that ensures a change doesn’t break previously working behavior.

- Format compliance: whether the model output matches a required structure (for example: strict JSON or a tool schema).

- Rubric: a scoring guide with clear criteria and anchors for human evaluation.

- Canary rollout: gradually releasing a change to a small fraction of traffic to reduce risk.

- Prompt version: a specific prompt template plus its runtime configuration tracked for reproducibility.
